C++ Async Keystate Triggers Again With Delay.

I recently found myself explaining the concept of threads and thread pools to my team. We encountered a complicated threading problem in our production environment that led us to review and analyze some legacy code. Although it threw me back to the basics, it was beneficial to review .NET capabilities and features for managing threads, which mainly reflected how significantly .NET has evolved over the years. With the new options available today, tackling the production problem is much easier.

TL;DR

So, this circumstance led me to write this blog post, which goes through the underlying foundations and features of threads and thread pools:

- Thread pool concept

- Examples of thread pool execution in .NET

- Task Parallel Library features (some uncommon ones)

- Task-based Asynchronous Pattern (TAP)

The .NET threading libraries are a vast topic with many nuances, so I tried to keep this article as readable and concise as possible. Still, I placed some links to external resources for delving deeper into some of the topics.

A Short Review of .NET Thread Pools

Let's start with a high-level review of threads. What is the incentive to use threads? Ultimately, it is freeing local resources and eliminating bottlenecks. The class System.Threading.Thread is the most basic way to run a thread, but it comes with a price. The creation and destruction of threads incur high resource utilization, mainly CPU overhead. To avoid this penalty, which can be expensive in terms of performance when threads are created and destroyed extensively, .NET provides the ThreadPool class. This class allocates a certain number of threads and manages them for you.

Although ThreadPool has advantages, it is better to stick to the good old Thread creation practice in some scenarios. It is especially relevant when you need finer control over the thread's execution. For example, the ThreadPool instantiates only background threads, so you should use the Thread object when a foreground thread is required (read here about the meaning of background and foreground threads). Other scenarios are setting the thread's priority or aborting a specific thread. This low-level control cannot be done if you use a ThreadPool object.

The Task library is based on the thread pool concept, but let's briefly review other implementations of thread pools before diving into it.

Creating Thread Pools

By definition, a thread is an expensive operating system object. Using a thread pool reduces the performance penalty by sharing and recycling threads; it exists primarily to reuse threads and to ensure the number of active threads is optimal. The number of threads can be set to lower and upper bounds. The minimum applies to the minimum number of threads the ThreadPool maintains, even when idle. When the upper limit is hit, all new work is queued until a running thread finishes and frees a slot for it.

C# allows the creation of thread pools by calling one of the following:

- ThreadPool.QueueUserWorkItem

- Asynchronous delegates

- Background worker

- Task Parallel Library (TPL)

A trivia comment: to identify whether a thread is part of a thread pool or not, check the boolean property Thread.CurrentThread.IsThreadPoolThread.

The ThreadPool Class

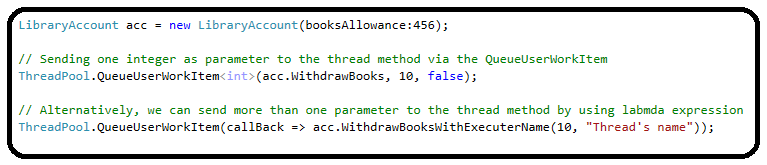

Using the ThreadPool class is the classic, clean approach to run work on a thread from a thread pool: calling the ThreadPool.QueueUserWorkItem method adds a work item to the pool's queue. After queuing the work item, the method runs when a thread pool thread is available; if the thread pool is occupied or has reached its upper limit, the work item waits.

The ThreadPool object can limit the number of threads running under it; this is configured by calling the ThreadPool.SetMaxThreads method. The call is ignored (it returns false) if the parameters are smaller than the number of CPUs on the machine. To obtain the number of CPUs on the machine, call Environment.ProcessorCount.

// threadPoolMax = Environment.ProcessorCount;

result = ThreadPool.SetMaxThreads(threadPoolMax, threadPoolMax);

In essence, queuing work with the ThreadPool class is quite easy, but it has some limitations. Passing parameters to the thread method is quite rigid, since the WaitCallback delegate receives only one argument (an object); the QueueUserWorkItem method has an overload that receives a generic value, but it is still only one parameter. We can use a lambda expression to bypass this limitation and send the parameters directly to the method of the thread:
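
A minimal sketch of the lambda approach, assuming a hypothetical two-argument worker named Multiply (the class and method names are illustrative, not from the original article). The lambda satisfies the WaitCallback signature and captures both arguments from the enclosing scope:

```csharp
using System;
using System.Threading;

public static class QueueWithLambdaDemo
{
    // Hypothetical worker that needs two arguments; WaitCallback alone can pass only one object.
    private static int Multiply(int left, int right) => left * right;

    public static int Run(int left, int right)
    {
        int product = 0;
        using (var done = new ManualResetEventSlim(false))
        {
            // The lambda matches WaitCallback (its single object argument is ignored)
            // and captures 'left' and 'right' from the enclosing method.
            ThreadPool.QueueUserWorkItem(_ =>
            {
                product = Multiply(left, right);
                done.Set(); // signal the caller that the work item has finished
            });

            done.Wait(); // block until the pool thread signals completion
        }
        return product;
    }

    public static void Main()
    {
        Console.WriteLine(Run(6, 7)); // prints 42
    }
}
```

The ManualResetEventSlim is only there so the sketch can return the value; fire-and-forget work would not need it.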

Another missing feature in the ThreadPool class is signaling when all threads in the thread pool have finished. The ThreadPool class does not expose an equivalent to Task.WaitAll(), so it must be done manually. The method below shows how to pause the main thread until all threads are done; it uses a CountdownEvent object to count the number of active threads.

Using the CountdownEvent object to identify when the execution of the threads has concluded:

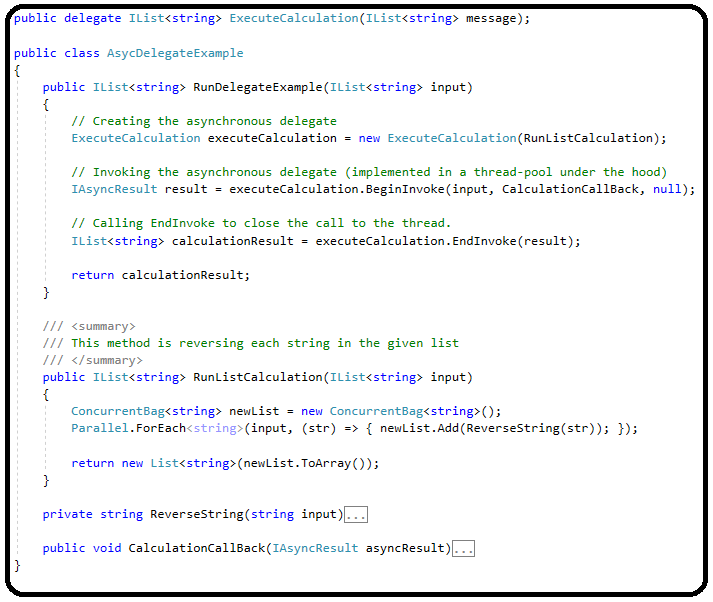
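
A minimal sketch of the CountdownEvent approach described above. The class name, method name, and the trivial work done by each item are assumptions made for illustration:

```csharp
using System;
using System.Threading;

public static class CountdownDemo
{
    public static int RunWorkItems(int workItemCount)
    {
        int completed = 0;

        // The event starts at workItemCount and is set once it reaches zero.
        using (var countdown = new CountdownEvent(workItemCount))
        {
            for (int i = 0; i < workItemCount; i++)
            {
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    Interlocked.Increment(ref completed); // stand-in for real work
                    countdown.Signal();                   // one work item is done
                });
            }

            countdown.Wait(); // pause the calling thread until every item has signaled
        }

        return completed;
    }

    public static void Main()
    {
        Console.WriteLine(RunWorkItems(5)); // prints 5
    }
}
```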

Asynchronous Delegates

This is the second mechanism that uses a thread pool; .NET asynchronous delegates run on a thread pool under the hood. Invoking a delegate asynchronously allows sending parameters (input and output) and receiving results more flexibly than using the Thread class, which receives a ParameterizedThreadStart delegate that is more rigid than a user-defined delegate. The sample code below exemplifies how to initiate and run a delegate asynchronously:

Another trivia fact: the asynchronous delegate feature is not supported in .NET Core; the system throws a System.PlatformNotSupportedException when running on this platform. This is not a real limitation; there are other, newer ways to generate threads today.

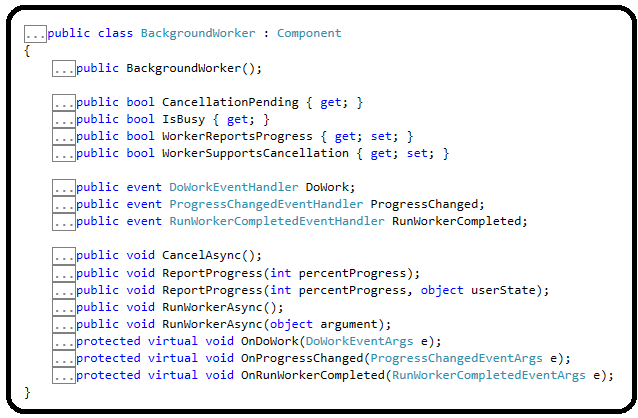

The Background Worker

The BackgroundWorker class (under the System.ComponentModel namespace) uses the thread pool as well. It abstracts the thread creation and monitoring process while exposing events that report the thread's progress, making this class suitable for GUI responses, for example, reflecting a long-running process that executes in the background. By wrapping the System.Threading.Thread class, it makes interacting with the thread much easier.

The BackgroundWorker class exposes two main events: ProgressChanged and RunWorkerCompleted; the first is triggered by calling the ReportProgress method.
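
A minimal sketch of these two events in a console application; the summing workload and all names are assumptions made for illustration. Without a GUI synchronization context the events are raised on thread pool threads, so progress messages may be printed out of order:

```csharp
using System;
using System.ComponentModel;
using System.Threading;

public static class BackgroundWorkerDemo
{
    public static int Run(int steps)
    {
        int result = 0;
        using (var finished = new ManualResetEventSlim(false))
        using (var worker = new BackgroundWorker { WorkerReportsProgress = true })
        {
            worker.DoWork += (sender, e) =>
            {
                var self = (BackgroundWorker)sender;
                int sum = 0;
                for (int i = 1; i <= steps; i++)
                {
                    sum += i;                           // stand-in for long-running work
                    self.ReportProgress(i * 100 / steps); // raises ProgressChanged
                }
                e.Result = sum; // handed over to RunWorkerCompleted
            };

            worker.ProgressChanged += (sender, e) =>
                Console.WriteLine($"Progress: {e.ProgressPercentage}%");

            worker.RunWorkerCompleted += (sender, e) =>
            {
                result = (int)e.Result;
                finished.Set(); // let the caller continue
            };

            worker.RunWorkerAsync();
            finished.Wait(); // a GUI application would not block here
        }
        return result;
    }

    public static void Main()
    {
        Console.WriteLine($"Result: {Run(4)}");
    }
}
```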

After reviewing three ways to run threads based on thread pools, let's dive into the Task Parallel Library.

Task Parallel Library Features

The Task Parallel Library (TPL) was introduced in .NET 4.0 as a significant improvement in running and managing threads compared to the System.Threading library that existed before; this was the big news at its debut.

In short, the Task Parallel Library (TPL) provides more efficient ways to generate and run threads than the traditional Thread class. Behind the scenes, Task objects are queued to a thread pool. This thread pool is enhanced with algorithms that determine and adjust the number of threads to run and provide load balancing to maximize throughput. These features make Tasks relatively lightweight and let them handle threads more effectively than before.

The TPL syntax is friendlier and richer than the Thread library; for example, defining fine-grained parallelism is much easier than before. Among its new features, you can find partitioning of the work, taking care of state management, scheduling threads on the thread pool, using callback methods, and enabling continuation or cancellation of tasks.

The main class in the TPL library is Task; it is a higher-level abstraction of the traditional Thread class. The Task class provides more efficient and more straightforward ways to write and interact with threads. The Task implementation offers fertile ground for handling threads.

The basic constructor of a Task object takes the delegate Action, which returns void and accepts no parameters. We can create a thread that takes parameters and bypass this limitation with a constructor that accepts one generic parameter, but it is still too rigid (Task(Action<object> action, object parameter)). Therefore, an easier instantiation would be an empty delegate with the explicit implementation of the thread.

Let's review some features the Task library presents:

- Tasks continuation

- Parallelizing tasks

- Canceling tasks

- Synchronizing tasks

- Converging tasks back to the calling thread

Feature #1: Tasks Continuation

The Task implementation synchronizes the execution of threads easily, by setting execution order or canceling tasks.

Before using the Task library, we had to use callback methods, but with TPL, it is much more comfortable. A simple way to chain threads and create a sequence of execution is the Task.ContinueWith method. The ContinueWith method can be chained to the newly created Task or defined on a new Task object.

Another benefit of using the ContinueWith method is passing the previous task as a parameter, which enables fetching the result and processing it.

The ContinueWith method accepts a parameter that controls the execution of the subsequent thread, TaskContinuationOptions; I find some of its continuation options very useful:

- OnlyOnFaulted/NotOnFaulted (the continuing Task is executed depending on whether the previous thread has thrown an unhandled exception or not);

- OnlyOnCanceled/NotOnCanceled (the continuing Task is executed depending on whether the previous thread was canceled or not).

You can set more than one option by applying a bitwise OR operation to the TaskContinuationOptions items.

Besides calling the ContinueWith method, there are other options to run threads sequentially. The TaskFactory class contains other implementations to continue tasks, for example, the ContinueWhenAll and ContinueWhenAny methods. The ContinueWhenAll method creates a continuation Task object that starts when a set of specified tasks has been completed. In contrast, the ContinueWhenAny method creates a new task that will begin upon completing any task in the set that was provided as a parameter.

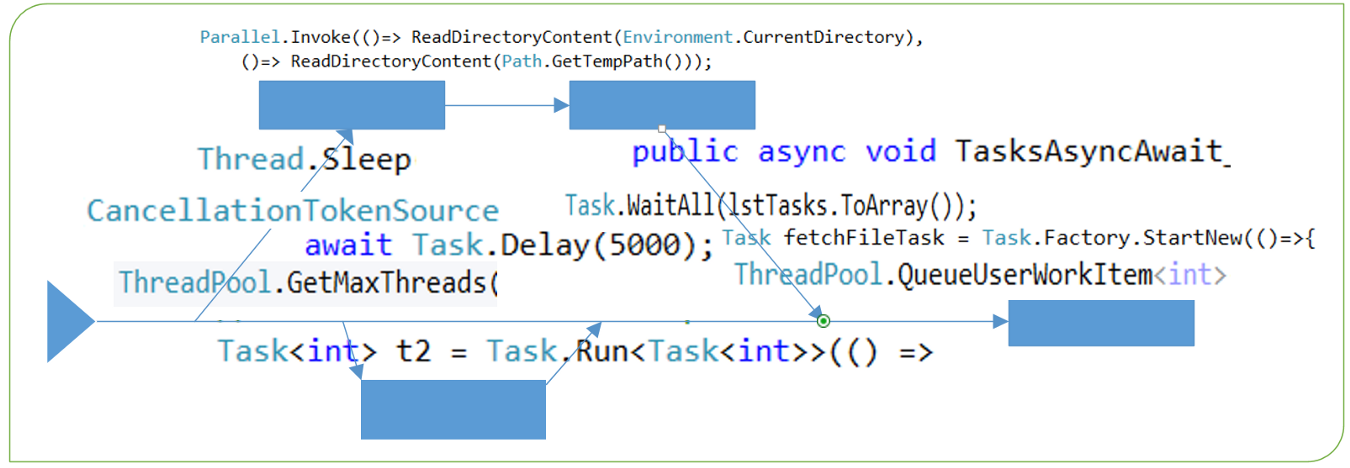
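
A minimal sketch of the continuation options discussed above, assuming illustrative names and a trivial workload. It chains one continuation for the success path, one for the faulted path, and uses ContinueWhenAll to combine several tasks:

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ContinuationDemo
{
    public static string Run(bool fail)
    {
        Task<int> first = Task.Run(() =>
        {
            if (fail) throw new InvalidOperationException("first task failed");
            return 21;
        });

        // Runs only if 'first' did not fault; the previous task arrives as a parameter.
        Task<string> onSuccess = first.ContinueWith(
            previous => $"result: {previous.Result * 2}",
            TaskContinuationOptions.NotOnFaulted);

        // Runs only if 'first' threw an unhandled exception.
        Task<string> onFailure = first.ContinueWith(
            previous => $"error: {previous.Exception.InnerException.Message}",
            TaskContinuationOptions.OnlyOnFaulted);

        // The continuation whose condition is not met ends up canceled,
        // so only the matching one is read here.
        return fail ? onFailure.Result : onSuccess.Result;
    }

    public static int SumWhenAll()
    {
        Task<int>[] parts = { Task.Run(() => 1), Task.Run(() => 2) };

        // Starts once every task in 'parts' has completed.
        Task<int> total = Task.Factory.ContinueWhenAll<int, int>(
            parts, done => done.Sum(t => t.Result));

        return total.Result;
    }

    public static void Main()
    {
        Console.WriteLine(Run(fail: false)); // prints "result: 42"
        Console.WriteLine(Run(fail: true));  // prints "error: first task failed"
        Console.WriteLine(SumWhenAll());     // prints 3
    }
}
```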

Feature #2: Parallelizing Tasks

The Task library allows running tasks in parallel after defining them together. The method Parallel.Invoke runs the tasks given as arguments; the methods Parallel.ForEach and Parallel.For run tasks in a loop.

This is an elegant approach to divide the execution resources; however, it may not be the fastest way to run your business logic (see the caveats section at the end of this article).

Exceptions thrown during the execution of the For, ForEach, or Invoke methods are collected until the tasks are completed and are then thrown as an AggregateException.
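
A minimal sketch of Parallel.For and Parallel.Invoke, including the AggregateException behavior; the workloads and names are assumptions made for illustration:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelDemo
{
    // Sums i * i for i in [0, count) with the iterations spread across pool threads.
    public static long SumOfSquares(int count)
    {
        long total = 0;
        Parallel.For(0, count, i => Interlocked.Add(ref total, (long)i * i));
        return total;
    }

    // Runs three actions in parallel; two of them throw.
    public static int CountFailures()
    {
        try
        {
            Parallel.Invoke(
                () => throw new InvalidOperationException("first"),
                () => Console.WriteLine("second action still runs"),
                () => throw new ArgumentException("third"));
            return 0;
        }
        catch (AggregateException ex)
        {
            // The individual exceptions are available as inner exceptions.
            return ex.InnerExceptions.Count;
        }
    }

    public static void Main()
    {
        Console.WriteLine(SumOfSquares(4)); // 0 + 1 + 4 + 9 = 14
        Console.WriteLine(CountFailures());
    }
}
```

Interlocked.Add is used because the loop body runs concurrently; a plain `total += ...` would be a data race.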

Feature #3: Cancelling Tasks

The framework allows canceling tasks from the outside. It is implemented by injecting a cancellation token object into a task before its execution. This mechanism facilitates shutting down a thread gracefully after a request to cancel has been raised, as opposed to the Thread.Abort method, which kills the thread abruptly.

Nevertheless, there's a tricky part: the thread is aware of the cancellation only when it checks the IsCancellationRequested property, and thus cancellation will not work without checking this property's value deliberately. Moreover, the thread will continue its execution as usual and retain its Running status until the thread ends, unless an exception is thrown.

The C# test method below demonstrates this flow while focusing on the thread's end condition and the Wait method. This is quite a long and convoluted example, so I highlight the important pieces:

Firstly, notice the nuances of throwing exceptions inside the thread once IsCancellationRequested equals true; if the exception is OperationCanceledException, the thread's state becomes Canceled, but if another exception is thrown, then the thread's state is Faulted.

Secondly, the Wait method has an overload that accepts the cancellation token; when calling Wait(CancellationToken), the wait ends as soon as the token is canceled (with an OperationCanceledException), regardless of whether the task itself has thrown an exception, whereas the parameterless Wait() returns only when the thread's execution has finished (with or without an exception). This behavior also affects the exception handling flow.

To grasp this topic completely, you can play with the example below, manipulate its flow, and see how the state of the thread changes. This example is a bit long, but it unfolds the different scenarios and results when canceling tasks. I used the xUnit MemberData attribute to generate the various test scenarios.

Catching exceptions:

You may notice that when the Wait method was called and an exception was thrown, the caught exception was AggregateException; Wait collects exceptions into an AggregateException object, which holds the inner exceptions and the stack trace. That can be tricky when trying to unravel the true source of the exception.
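
A condensed sketch of the cancellation flow, written as a plain console program rather than the xUnit test the text refers to; all names are illustrative assumptions. It shows how the exception type thrown after cancellation determines the final task status, and that Wait() surfaces the failure as an AggregateException:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CancellationDemo
{
    public static TaskStatus RunAndCancel(bool throwCancellationException)
    {
        using (var cts = new CancellationTokenSource())
        using (var started = new ManualResetEventSlim(false))
        {
            CancellationToken token = cts.Token;

            Task task = Task.Run(() =>
            {
                started.Set();
                // The task notices the cancellation only because it polls the token.
                while (!token.IsCancellationRequested)
                {
                    Thread.Sleep(10);
                }

                if (throwCancellationException)
                {
                    token.ThrowIfCancellationRequested(); // OperationCanceledException
                }
                else
                {
                    throw new InvalidOperationException("some other failure");
                }
            }, token);

            started.Wait(); // make sure the task body is running before canceling
            cts.Cancel();

            try
            {
                task.Wait(); // the parameterless Wait returns only when the task has ended
            }
            catch (AggregateException ex)
            {
                Console.WriteLine($"Inner exception: {ex.InnerException.GetType().Name}");
            }

            return task.Status;
        }
    }

    public static void Main()
    {
        Console.WriteLine(RunAndCancel(true));  // Canceled
        Console.WriteLine(RunAndCancel(false)); // Faulted
    }
}
```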

Feature #4: Synchronising Tasks with TaskCompletionSource

This is another simple and useful feature for implementing an easy-to-use producer-consumer pattern. Synchronizing between threads has never been easier than with a TaskCompletionSource object; it is an out-of-the-box implementation for triggering a follow-up action after releasing the source object.

The TaskCompletionSource releases the resulting task after setting the result (the SetResult method), canceling the task (the SetCanceled method), or raising an exception (the SetException method). Similar behavior can be achieved with EventWaitHandle, which implements the abstract class WaitHandle; however, this pattern is a better fit for sequential operations, such as reading files or fetching data from a remote source. Since we want to avoid holding our machine's resources, triggering a response is the more efficient solution.

The test method below exemplifies this pattern; the second task's execution continues only after setting the first one.
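
A minimal sketch of this pattern as a console program instead of a test method; the producer and consumer names are assumptions made for illustration. The consumer does not hold a thread while it waits: it resumes only once the producer calls SetResult:

```csharp
using System;
using System.Threading.Tasks;

public static class CompletionSourceDemo
{
    public static async Task<string> RunAsync()
    {
        var source = new TaskCompletionSource<int>();

        // Consumer: continues only after the producer has set the result.
        Task<string> consumer = Task.Run(async () =>
        {
            int value = await source.Task;
            return $"consumed {value}";
        });

        // Producer: releases the consumer by completing the source's task.
        Task producer = Task.Run(() => source.SetResult(42));

        await producer;
        return await consumer;
    }

    public static void Main()
    {
        Console.WriteLine(RunAsync().GetAwaiter().GetResult()); // prints "consumed 42"
    }
}
```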

Feature #5: Converging Back to The Calling Thread

On the same thread synchronization topic, the Task library offers several ways to wait until other tasks have finished their execution. This is a significant expansion of the Thread.Join concept.

The methods Wait, WaitAll, and WaitAny are different options to hold the calling thread until other threads have finished.

The code snippet beneath demonstrates these methods:

The methods WhenAll and WhenAny are variations of WaitAll and WaitAny; instead of blocking the calling thread, these methods create a new Task upon return.
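
A minimal sketch contrasting the blocking and the task-returning variants, under the assumption of three trivial tasks; the names are illustrative:

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ConvergeDemo
{
    private static Task<int>[] StartTasks() =>
        new[] { Task.Run(() => 1), Task.Run(() => 2), Task.Run(() => 3) };

    // Blocking variant: the calling thread is held until the tasks finish.
    public static int BlockingSum()
    {
        Task<int>[] tasks = StartTasks();

        int firstIndex = Task.WaitAny(tasks); // index of a task that has completed
        Console.WriteLine($"Task at index {firstIndex} finished first");

        Task.WaitAll(tasks);                  // returns once every task has completed
        return tasks.Sum(t => t.Result);
    }

    // Task-returning variant: nothing blocks; the method resumes when the tasks are done.
    public static async Task<int> AwaitableSumAsync()
    {
        Task<int>[] tasks = StartTasks();

        Task<int> first = await Task.WhenAny(tasks); // a task that has completed
        Console.WriteLine($"First available result: {first.Result}");

        int[] results = await Task.WhenAll(tasks);   // results in the order of 'tasks'
        return results.Sum();
    }

    public static void Main()
    {
        Console.WriteLine(BlockingSum());                                // prints 6
        Console.WriteLine(AwaitableSumAsync().GetAwaiter().GetResult()); // prints 6
    }
}
```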

There is a difference between Task.Wait and Thread.Join, although both block the calling thread until the other thread has concluded. This difference relates to the fundamental difference between Thread and Task; the latter runs in the background. Since a Thread, by default, runs as a foreground thread, it may not be necessary to call Thread.Join to ensure it reaches completion before closing the main application. Nevertheless, there are cases where the flow of the logic dictates that a thread must be completed before moving on, and this is the place to call Thread.Join.

The Task library has many other capabilities that this article cannot include (I'm trying to keep you engaged). I'll leave you with one last useful feature related to the others mentioned in the article: scheduling tasks; it is explained in depth in the .NET documentation.

The Differences Between Thread.Sleep() and Task.Delay()

In some of the examples above, I used the Thread.Sleep or Task.Delay methods to hold the execution of the main thread or the side thread. Although it seems these two calls are equivalent, they are significantly different.

A call to Thread.Sleep freezes the current thread, since it runs synchronously, whereas a call to Task.Delay returns a task that completes after the given time without blocking the current thread (it runs asynchronously).

If you want the current method to wait, you can call await Task.Delay(), which resumes the method once the delay has finished (along with decorating the calling method with the async keyword, see the next chapter). Again, it differs from Thread.Sleep, which forces the current thread to halt all its execution.
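
A small sketch of the difference, with illustrative names and arbitrary durations chosen as assumptions. Task.Delay hands back a pending task immediately, while Thread.Sleep holds the current thread for the whole duration:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class DelayDemo
{
    public static async Task<bool> DelayReturnsImmediatelyAsync()
    {
        // Task.Delay returns right away with a task that is still pending.
        Task delay = Task.Delay(TimeSpan.FromMilliseconds(500));
        bool stillPending = !delay.IsCompleted;

        // In contrast, this call blocks the current thread for 50 ms.
        Thread.Sleep(50);

        // The method is suspended here without blocking a thread
        // and resumes once the delay has elapsed.
        await delay;

        return stillPending && delay.IsCompleted;
    }

    public static void Main()
    {
        Console.WriteLine(DelayReturnsImmediatelyAsync().GetAwaiter().GetResult()); // True
    }
}
```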

Task-Based Asynchronous Pattern (TAP)

Async and await are keyword markers that indicate asynchronous operations; the await keyword is a non-blocking call that specifies where the code should resume after a task is completed.

The async/await syntax was introduced with C# 5.0 and .NET Framework 4.5. It allows writing asynchronous code that looks like synchronous code.

But how does async/await manage to do it? It's nothing magical, just a little bit of syntactic sugar that ensures we receive the task's result when needed. The same result can be achieved by using the ContinueWith method and the Result property; however, async/await allows using the return value easily in different locations in the code. That is the advantage of this pattern.

These two keywords were designed to go together; if we declare a method as async without having any await, it will run synchronously. Note that a compilation error is raised when using await without the async keyword on the method (The 'await' operator can only be used within an async method).
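
To make the comparison concrete, here is a minimal sketch of the same logic written twice, once with ContinueWith and once with async/await. FetchNumberAsync is a hypothetical stand-in for any asynchronous operation:

```csharp
using System;
using System.Threading.Tasks;

public static class AwaitVersusContinueWith
{
    // Hypothetical asynchronous operation used by both variants.
    private static Task<int> FetchNumberAsync() => Task.Run(() => 20);

    // Continuation style: the follow-up logic lives inside a callback.
    public static Task<int> WithContinueWith() =>
        FetchNumberAsync().ContinueWith(previous => previous.Result + 1);

    // async/await style: reads like synchronous code, and 'number'
    // can be used anywhere in the rest of the method.
    public static async Task<int> WithAwait()
    {
        int number = await FetchNumberAsync();
        return number + 1;
    }

    public static void Main()
    {
        Console.WriteLine(WithContinueWith().Result); // prints 21
        Console.WriteLine(WithAwait().Result);        // prints 21
    }
}
```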

Avoiding Deadlocks When using async/await

In some scenarios, calling await might cause a deadlock since the calling thread is waiting for a response. A detailed explanation can be found in this article (C# async-await: Common Deadlock Scenario).

As described in the article, a possible solution is calling the ConfigureAwait(false) method on the Task. This instructs the task to avoid capturing the calling context and to use a background thread to complete the work and return the result. With that, the result is set even though the calling thread is blocked.
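
A minimal sketch of the call pattern only; the method names are illustrative assumptions. A console application has no synchronization context, so this program would not deadlock either way; the pattern matters in environments such as UI applications, where a caller blocks the context that the continuation would otherwise need:

```csharp
using System;
using System.Threading.Tasks;

public static class ConfigureAwaitDemo
{
    public static async Task<int> ComputeAsync()
    {
        // ConfigureAwait(false): do not resume on the captured context;
        // the rest of the method continues on a thread pool thread.
        await Task.Delay(50).ConfigureAwait(false);
        return 42;
    }

    public static void Main()
    {
        // Blocking on the result is the risky part in context-bound environments.
        int value = ComputeAsync().Result;
        Console.WriteLine(value); // prints 42
    }
}
```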

ValueTask — Avoiding Creation of Tasks

C# 7.0 brought another evolution to the Task library, the ValueTask<TResult> struct, which aims to improve performance in cases where there is no need to allocate a Task<TResult>. The benefit of using the ValueTask struct is to avoid generating a Task object when the execution can be done synchronously while keeping the Task library API.

You can decide to return a ValueTask<TResult> struct from a method instead of allocating a Task object if it completed its execution successfully and synchronously; otherwise, if your method completed asynchronously, a Task<TResult> object will be allocated and wrapped with the ValueTask<TResult>. With that, you can keep the same API and treat ValueTask<TResult> as if it were Task<TResult>.
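
A minimal sketch of that decision, assuming a hypothetical cache in front of a slow lookup (the cache, the key, and the simulated I/O are illustrative). A cache hit returns synchronously without allocating a Task; a miss wraps a real Task<int>:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class ValueTaskDemo
{
    private static readonly ConcurrentDictionary<string, int> Cache =
        new ConcurrentDictionary<string, int>();

    public static ValueTask<int> GetLengthAsync(string key)
    {
        if (Cache.TryGetValue(key, out int cached))
        {
            return new ValueTask<int>(cached);     // synchronous path: no Task allocated
        }
        return new ValueTask<int>(LoadAsync(key)); // asynchronous path: wraps a Task<int>
    }

    private static async Task<int> LoadAsync(string key)
    {
        await Task.Delay(10); // stands in for real I/O
        int length = key.Length;
        Cache[key] = length;
        return length;
    }

    public static void Main()
    {
        // Each ValueTask instance is consumed exactly once.
        int first = GetLengthAsync("thread").AsTask().Result;
        ValueTask<int> second = GetLengthAsync("thread");

        Console.WriteLine(first);                          // prints 6
        Console.WriteLine(second.IsCompletedSuccessfully); // prints True (cache hit)
    }
}
```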

Keeping the same API is a significant advantage for keeping clean and consistent code. The ValueTask<TResult> can be awaited or converted to a Task object by calling the AsTask method. However, there are some limitations and caveats when working with ValueTask. The operations below should never be performed on a ValueTask instance; if you do not adhere to this, the results are undefined (based on the .NET documentation):

- Calling await more than once.

- Calling AsTask multiple times.

- Using .Result or .GetAwaiter().GetResult() when the operation hasn't been completed yet, or using them multiple times.

- Using more than one of these techniques to consume the instance.

You can read this article for further details.

To recap, when there is no need to allocate a new Task object, consider saving the cost of allocating it, and use ValueTask instead.

Caveat: Sometimes Threads Are Not The Ultimate Solution

After praising the usage of threads, there are some caveats to consider before using Task objects ubiquitously. Threads are not necessarily more efficient than synchronous calls. Some jobs do not benefit from threads, while the same tasks can be executed faster if done synchronously.

Firstly, the thread mechanism has an overhead, and thus it might not be an efficient solution. This overhead can eliminate the threading advantage for some short-running tasks. Not only the length of the execution matters but also its algorithm. If the algorithm is sequential, then it is not optimal to distribute its execution across multiple threads.

Secondly, machine limitations should not be overlooked when using threads; it is better to use a machine with a multi-core CPU, which is very common these days. The number of running threads should not exceed the number of available cores; otherwise, performance will degrade.

Thirdly, if the threads are consuming the same resource, we have shifted the problem to another bottleneck. For example, when our threads read from the same file system, they might be limited by the I/O throughput or the target machine's RAM.

Last Words

Using threads is another tool in your arsenal as a developer, and like any other tool, it should be used wisely. It is a fundamental capability for executing logic; however, it comes with additional costs, and not only in resources during execution time.

Writing and implementing threads adds complexity across the software development lifecycle phases; you have to invest more in each SDLC stage: developing, testing, implementing, and monitoring. Investing in monitoring is crucial for maintaining your product effectively. Do not neglect this stage, as you may find your resources drifting to analyzing production logs, trying to figure out the flow of your logic in an intricate mesh of threads.

Thank you for reading; I hope you find this blog post interesting; your comments and responses are most welcome.

Happy coding!

Opinions expressed by DZone contributors are their own.


Source: https://dzone.com/articles/overview-of-c-async-programming-with-thread-pools